Customers of RealityServer are usually seeking out the highest quality visuals possible. However, in many contexts it is not economical to provide this quality at all times. As a result, hybrid solutions using both WebGL and RealityServer have become popular. Here, WebGL is used during most of the interaction and RealityServer only at the end of the process for a final high quality image. The problem this creates is that you have to produce a WebGL version of the content somehow, ideally without authoring your content twice. In this article we’ll see how glTF 2.0, MDL materials and distillation let you repurpose your content automatically.

RealityServer can rapidly produce images of stunning realism, even interactively in many cases. However, in certain use cases, particularly consumer facing ones, it is often not possible to dedicate the server-side resources needed to service the demand. A useful strategy to reduce the requirements is to utilise client-side rendering technologies like WebGL for most of the visuals, reserving RealityServer for final higher quality results.

This can be a very effective solution, particularly when combined with the new queuing features in RealityServer. However, you’ve likely already set up all of your content for higher end rendering with complex materials. Repurposing this for WebGL can mean redoing all of your materials in addition to simplifying the geometry for lower end devices. We’ll leave simplifying the geometry to another day; in this article we are going to tackle the problem of materials.

Different software and technologies define materials in many different ways. There is no one right solution and navigating this landscape can be pretty challenging. Right now we are concerned with getting our RealityServer scenes rendering nicely in WebGL so let’s focus there.

RealityServer represents all materials using NVIDIA MDL (Material Definition Language). This provides an extremely rich set of components from which complex, physically accurate materials can be created. At times this can involve connecting these components with complex relationships, procedural functions, texture lookups and many other features. This is referred to as a Component Approach of material representation.

In contrast, file formats such as glTF 2.0 typically use a fixed material model with a set of pre-defined parameters designed to account for all possible materials you’d like to represent in advance. While texturing helps expand the usefulness of this approach, it can only represent materials possible to capture in this fixed model. This is usually referred to as the Ubershader Approach or Monolithic Approach of material representation.

Since these approaches are fundamentally different, one might expect a means of automatically going from one to the other to be impossible. While it is true that you cannot guarantee an exact representation of something built using a component approach in a particular ubershader based material, we’ll see below that there is a solution that gives you a good approximation.

So, you’ve set up all of your content for RealityServer with high end materials using MDL for rendering in Iray on your server. Now you want to create a reasonable approximation of your content for use in WebGL, for example by exporting glTF 2.0 data. How can you map those materials over to the much simpler glTF PBR material model?

Enter the MDL Distiller. Using the Distiller allows you to move arbitrary MDL materials into the Ubershader world through a process called distillation. This process takes the MDL material and creates a simplified version for you automatically. It offers a variety of target models for the simplification, and the Distiller makes a reasonable effort to ensure the simplified material looks like the original to the extent the target model allows.

Currently five target models are supported: diffuse, diffuse_glossy, specular_glossy, ue4 and transmissive_pbr. Some of these can represent complex materials better than others, but the different targets allow even extremely limited systems to have materials that give at least the same general appearance as the original materials.

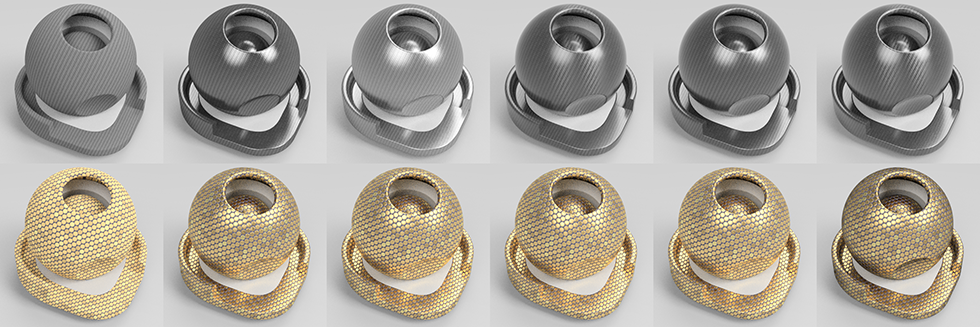

In the image above you can see the full MDL material on the far right and distilled versions with different targets alongside it. On the far left you can see the diffuse only target. Of course this is never going to look like the original, but it does surprisingly well at giving a similar impression. For the other models the results are much closer and the differences much more subtle.

After distilling a material, it may still have a complex graph of texture lookups and procedural functions, so a second step, baking, is applied to convert these graphs into standard texture maps which can be used with the target material. So it is a two step process: first simplify the material model, then bake all of the inputs to either constants or textures.
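
To make the baking step more concrete, here is a minimal conceptual sketch in Python. It is not how the RealityServer baker is implemented; the `checker` procedural, the `bake` helper and the sub-sample layout are all hypothetical stand-ins. It simply evaluates a function over the unit UV square, averages a few sub-samples per texel, and stores the result as a plain grid of values, which is the essence of turning a procedural input into a texture map.

```python
from typing import Callable, List


def checker(u: float, v: float, tiles: int = 4) -> float:
    """Hypothetical procedural input: a checkerboard returning 0.0 or 1.0."""
    return float((int(u * tiles) + int(v * tiles)) % 2)


def bake(func: Callable[[float, float], float],
         width: int, height: int, samples: int = 4) -> List[List[float]]:
    """Evaluate func over the unit UV square into a height x width grid.

    Each texel averages samples x samples evenly spaced sub-samples, so
    detail finer than one texel is smoothed rather than aliased.
    """
    texture = []
    for y in range(height):
        row = []
        for x in range(width):
            total = 0.0
            for sy in range(samples):
                for sx in range(samples):
                    # Sub-sample position inside texel (x, y), in UV space.
                    u = (x + (sx + 0.5) / samples) / width
                    v = (y + (sy + 0.5) / samples) / height
                    total += func(u, v)
            row.append(total / (samples * samples))
        texture.append(row)
    return texture


if __name__ == "__main__":
    # Bake the checkerboard to a tiny 8x8 texture and print it.
    for row in bake(checker, width=8, height=8):
        print(" ".join(f"{texel:.0f}" for texel in row))
```

No lighting is involved here, only evaluation of the input function, which is why a handful of samples per texel is usually enough.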

We have actually had a command (mdl_distill_material) in RealityServer to take advantage of this for some time. This allows you to distill individual materials in a highly customised way, however what we want to talk about here is applying this to automatic creation of assets for potential use outside RealityServer.

Starting with RealityServer 5.3, build 2593.229, we’ve added options for automatic material distillation during export for the Assimp based exporters. While this will do something useful for other formats like FBX and so on, where it really shines is in converting RealityServer content to glTF 2.0 for use on the web. Here is a quick example.

By distilling the scene’s MDL materials to the specular_glossy target we can create materials suitable for use with the popular KHR_materials_pbrSpecularGlossiness extension of glTF. We also capture some other properties that glTF supports outside the material model, such as emission and cutouts.
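
For reference, a glTF material using this extension has roughly the following shape. The sketch below builds one in Python; the property names come from the glTF 2.0 and KHR_materials_pbrSpecularGlossiness specifications, while the helper name, material name, factor values and texture indices are made-up placeholders. The exact output of the RealityServer exporter may differ.

```python
import json


def specular_glossiness_material(name, diffuse_tex, spec_gloss_tex,
                                 emissive=(0.0, 0.0, 0.0), cutout=False):
    """Build a glTF material dict using the specular-glossiness extension.

    diffuse_tex and spec_gloss_tex are indices into the glTF "textures"
    array (placeholder values in this sketch).
    """
    material = {
        "name": name,
        # Emission lives on the core material, outside the extension.
        "emissiveFactor": list(emissive),
        "extensions": {
            "KHR_materials_pbrSpecularGlossiness": {
                "diffuseFactor": [1.0, 1.0, 1.0, 1.0],
                "diffuseTexture": {"index": diffuse_tex},
                "specularFactor": [1.0, 1.0, 1.0],
                "glossinessFactor": 1.0,
                # RGB holds specular colour, alpha holds glossiness.
                "specularGlossinessTexture": {"index": spec_gloss_tex},
            }
        },
    }
    if cutout:
        # Cutouts map to glTF alpha masking on the core material.
        material["alphaMode"] = "MASK"
        material["alphaCutoff"] = 0.5
    return material


print(json.dumps(specular_glossiness_material("leaf", 0, 1, cutout=True),
                 indent=2))
```

A file using the extension also needs to list it in the top-level `extensionsUsed` array of the glTF document.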

To export your scene using distilling you need to pass some extra exporter options. In some cases just turning on the distill_materials option will be enough but you’ll likely also want to customise the options used for baking out textures where needed. Here is a quick example.

    {"jsonrpc": "2.0", "method": "export_scene", "params": {
        "scene_name": "example_scene",
        "export_options": {
            "distill_materials": true,
            "baker_options": {
                "baker_gpu_id": 0,
                "baker_resolution": {
                    "x": 1024,
                    "y": 1024
                },
                "baker_resource": "gpu_with_cpu_fallback",
                "baker_samples": 16,
                "baker_format": "png",
                "quality": "100",
                "gamma": 2.2
            }
        },
        "filename": "scenes/web_ready.gltf"
    }, "id": 1}

As you can see, you can control the resolution of the baking as well as the output format and the resource used for baking (this can be GPU accelerated). Note that baking does not perform any lighting calculation, so typically only a very small number of samples is needed; how many depends mostly on the amount of detail in the resulting baked texture. The resolution is the main thing you typically want to control, as these textures need to be downloaded when viewing. Note that the quality parameter is not the quality of the baking but just the quality setting for the image file format (if supported).
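
To get a feel for how quickly resolution adds up, here is some rough arithmetic as a Python sketch. Download size depends on how well each image compresses, so this instead estimates the decoded footprint of a texture on the client, assuming 8-bit RGBA texels and no GPU texture compression (both assumptions of this sketch, not statements about any particular viewer). A full mipmap chain adds roughly one third on top of the base level.

```python
def decoded_texture_bytes(width, height, channels=4, mipmaps=True):
    """Estimate the decoded size in bytes of an 8-bit-per-channel texture."""
    if not mipmaps:
        return width * height * channels
    total = 0
    w, h = width, height
    while True:
        total += w * h * channels
        if w == 1 and h == 1:
            break
        # Each mip level halves both dimensions, down to 1x1.
        w, h = max(1, w // 2), max(1, h // 2)
    return total


for size in (512, 1024, 2048, 4096):
    mib = decoded_texture_bytes(size, size) / (1024 * 1024)
    print(f"{size}x{size}: {mib:.1f} MiB per texture")
```

Doubling the resolution quadruples the footprint, and a distilled material may carry several baked maps, so it is worth choosing the smallest resolution that still holds the detail you need.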

While you can explicitly give a format for the results to be stored in, if the baker is generating information that requires alpha channels (for example the combined specular glossiness map or cutouts) then it may switch to use PNG automatically regardless of what you specify. You shouldn’t change the gamma setting unless you really know what you are doing. The exporter will embed the textures within the glTF file for you during export.
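
The export command shown earlier can be sent from any HTTP client. Below is a minimal sketch using only the Python standard library. It assumes a RealityServer instance that accepts JSON-RPC requests via HTTP POST at `http://localhost:8080/`; that URL, the helper names and the timeout are assumptions to adjust for your deployment. The option names are the ones from the example above.

```python
import json
import urllib.request


def build_export_request(scene_name, filename, resolution=1024, request_id=1):
    """Build the JSON-RPC payload for export_scene with distilling enabled."""
    return {
        "jsonrpc": "2.0",
        "method": "export_scene",
        "params": {
            "scene_name": scene_name,
            "export_options": {
                "distill_materials": True,
                "baker_options": {
                    "baker_resolution": {"x": resolution, "y": resolution},
                    "baker_resource": "gpu_with_cpu_fallback",
                    "baker_samples": 16,
                    "baker_format": "png",
                },
            },
            "filename": filename,
        },
        "id": request_id,
    }


def send_command(url, payload, timeout=300):
    """POST a JSON-RPC payload and return the decoded JSON reply."""
    data = json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Example usage (requires a running server, so it is left commented out):
# payload = build_export_request("example_scene", "scenes/web_ready.gltf")
# reply = send_command("http://localhost:8080/", payload)
# print(reply.get("error") or "export finished")
```

Keeping the payload construction separate from the network call makes it easy to export the same scene at several resolutions and compare the results.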

While this is a great start and usable for many customers, there are some limitations with the current approach. The first is that texture transforms are not supported by the exporter, so right now you have to use fully UV mapped models. We have recently added KHR_texture_transform support to our glTF importer, but it is not currently available in the exporter.

This is related to another limitation, which is that the distiller technology itself (more specifically the baking part) does not deal with UV transformations applied to materials. The issue here is that any transformations are baked into the output, which will likely result in tileable textures no longer being tileable. There isn’t a general solution to this, since in MDL you can have multiple arbitrary UV transforms for every texture lookup and it is not possible to create a baked texture that remains tileable while accounting for this when targeting a single texture.

Since glTF does not support multiple textures or texture graphs for the same property, these types of situations cannot be readily converted to glTF in an automated way. As a result, if you think you will want to use your RealityServer content in glTF, you may want to go for fully UV mapped models when creating them.
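
A small numerical sketch shows why a baked-in transform breaks tiling. It is a one-dimensional toy in Python with a hypothetical pattern and hypothetical scale values, not a model of the actual baker. The source pattern repeats every unit of UV; once a scale is baked into the texture, repeating the baked result only matches the transformed material when the scale happens to be a whole number.

```python
import math


def pattern(u):
    """Hypothetical tileable source pattern with period 1 in UV space."""
    return 0.5 + 0.5 * math.cos(2.0 * math.pi * u)


def bake_row(scale, width):
    """Bake pattern(scale * u) at texel centres across u in [0, 1)."""
    return [pattern(scale * (x + 0.5) / width) for x in range(width)]


def wraps_cleanly(row, scale, tolerance=1e-6):
    """Check whether repeating the baked row matches the real material.

    A repeated texture shows row[0] again at u = 1 + 0.5 / width, whereas
    the transformed material would show pattern(scale * u) at that point.
    """
    width = len(row)
    u = 1.0 + 0.5 / width
    return abs(row[0] - pattern(scale * u)) < tolerance


for scale in (1.0, 2.0, 1.5):
    row = bake_row(scale, width=64)
    print(f"scale {scale}: wraps cleanly = {wraps_cleanly(row, scale)}")
```

Rotations and differing transforms per texture lookup cause the same kind of mismatch, which is why fully UV mapped models are the safer choice for content destined for glTF.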

In this article we’ve only talked about a solution for materials. Of course you don’t want to take your nice multi-million triangle model from RealityServer, convert it to glTF and just throw it on a server. This would result in long download times and for more limited devices even an inability to view the file.

There are several options available for dealing with geometry simplification. Two popular ones are RapidCompact by the Darmstadt Graphics Group and InstaLOD. These tools allow you to greatly simplify model geometry while retaining important properties such as UV coordinates and surface normals. We’ll explore some of these options in a future article.

At the start of the year we began introducing glTF 2.0 related features to RealityServer, beginning with support for importing glTF content with their PBR materials. Customers have widely adopted this as a great way to get content into RealityServer and we have been iterating on this support ever since. This is only the start of the process in supporting glTF 2.0 for export and before distillation we didn’t have a good answer for how to handle arbitrary MDL materials. We hope to continue improving both the import and the export features and are always interested in hearing about what you’d like to see next so get in touch and share your ideas.

Paul Arden has worked in the Computer Graphics industry for over 20 years, co-founding the architectural visualisation practice Luminova out of university before moving to mental images and NVIDIA to manage the Cloud-based rendering solution, RealityServer, now managed by migenius where Paul serves as CEO.